Computer Architecture, Digital Logic and Low-Level Programming for T-Level and A-Level Computer Scientists.

This is a free sample of the introduction to give you a flavour of the style. This self-published book is available in print and as an e-book through Amazon Kindle (available for free with Kindle Unlimited).

Introduction

Computer Architecture is a fascinating subject and provides an incredible insight into the most pervasive technology ever created by the human race, perhaps with the exception of the wheel. It was the subject of my MSc research at the University of York (2011-2013). My passion for this subject had been rekindled by my teaching work at York College and I'd like to share some of that with you in this book. This is not a normal sort of textbook; there are historical notes, personal anecdotes and random thoughts to spice up the mix amidst the factual information.

It is easy to forget that electronic computers are a relatively recent phenomenon. Colossus, the code-breaking machine built in secret during WWII, came into service at Bletchley Park in January 1944, less than three months before my Dad was born. He now walks around with a smartphone in his pocket that has millions of times more computing power than that first all-electronic computer (which filled a good-sized room and would probably have run for about half a second on batteries).

This work was prompted by some very encouraging feedback from my own A-level and vocational students, who have told me over the years how they enjoy the anecdotes and random facts about computer history that I throw into my lessons. I apologise to those who requested a podcast, but I hope an e-book is an agreeable substitute. The final push came via a couple of articles I wrote for the British Computer Society's "IT Now" magazine, the first of which you can read here. The second article, "The First Computers on the Moon", is included as Chapter 11 of the book.

Humans thrive on stories: our brains seem hard-wired to find a narrative in everything we experience; so wherever possible, I have included stories about computer pioneers, experimental machines, interesting technologies and my own experiences throughout the four decades I have been working (and playing) with computers.

I would like to dedicate this book to all the students who have sat patiently over the last 20 years and listened to me ramble on about one of my favourite subjects, and to my Dad, who not only built the first computer I ever programmed (more about that later) but also proofread the chapters (as he did with my MSc thesis). I hope you found this a little easier going than my thesis, Dad.

A Brief History of Computing

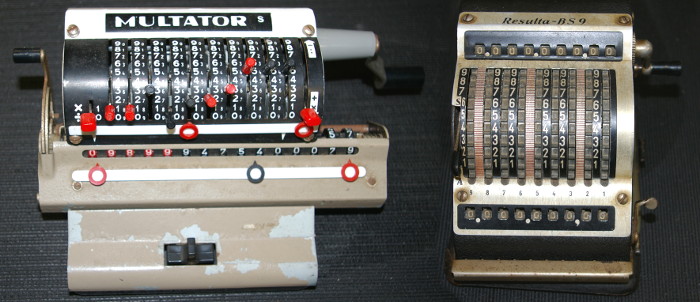

As I began to explain, the history of electronic computers is very short; in fact, it spans a single human lifetime. Calculating machines have a much longer history: the mathematician Blaise Pascal invented one of the first mechanical calculators [1] in the mid-seventeenth century. The Antikythera mechanism (a machine that accurately modelled the movements of the Moon and planets) is around 2,000 years old, and who knows, perhaps Stonehenge was a prehistoric calendar app? If so, it may also have been the first "portable computer", as it now seems clear that about forty of the so-called bluestones were moved 150 miles from the Preseli Hills in Pembrokeshire, west Wales [2], to their final home on Salisbury Plain.

The first mechanical "computer" was designed (though sadly never built) by the mathematician Charles Babbage in around 1837. Before that, he had designed and partially built his Difference Engine, which was more like a sophisticated calculator for producing mathematical tables; you can see a working version in the Science Museum in London [3]. It is a fantastic feat of engineering.

While Babbage never built his computer (the Analytical Engine), his friend Augusta Ada King, Countess of Lovelace [4], now more commonly known as Ada Lovelace, saw its great potential. Ada was a keen mathematician (not considered a fitting pursuit for a Victorian lady) and as headstrong as her estranged father, the poet Lord Byron, so she found a way to pursue her dream.

Ada wrote detailed documentation for Babbage's invention and even devised programs for it, making her the world's first programmer. This is commemorated by the programming language Ada, which I used at university, and more recently by Ada Lovelace Day, a rapidly growing worldwide event aimed at promoting STEM subjects to female students, who are still very much under-represented in the field.

What I find most impressive about Ada's contribution is that she saw beyond the mathematical applications of the Analytical Engine, realising that anything that could be numerically encoded, such as music, could be handled by the machine. What an incredible insight to spot that such abstractions would be possible; of course, we now think nothing of composing, recording and playing music by computer, amongst all the other things they can do.

One of the pioneers of electronic music, Laurie Spiegel (born just 18 months after my Dad), worked at Bell Labs in the early 1970s and learned to program on their mainframe computers. One of her compositions was included on the "Golden Record" that was sent into deep space aboard the Voyager probes in 1977. She currently lives in New York and is one of many pioneering electronic musicians featured in the film Sisters with Transistors, which was released in the spring of 2021.

Unfortunately, Charles Babbage was a bit of a difficult man to work with and fell out with everyone who could have helped realise his machine, eventually running out of money and dying a pauper. Ada had died almost 20 years earlier at the tender age of 36, not the only computing pioneer to die so tragically young. However, it would take almost a hundred years and the advent of electronics to see his and Ada's vision become a reality.

The age of electronics began in 1904 with the invention of the thermionic valve [5] (called a vacuum tube in the USA). However, it would be another 40 years before a computer was built entirely from valves. Some experimental machines were built in the early 1940s using electromechanical relays, the first being the Z3 built by German engineer Konrad Zuse [6]. Three years later, the Harvard Mark I went into operation [7] using similar technology (see Harvard Architecture in Chapter 3).

At this point, I need to reverse slightly to 1936 when another young mathematician (based at Cambridge University) effectively reinvented the computer. Alan Turing's paper "On computable numbers, with an application to the Entscheidungsproblem" [8] described a theoretical machine that could automatically carry out computations (now known as a Turing Machine). This paper is the part of Turing's (short) life that seems to get missed out of the movies but could be his greatest moment.

A Turing Machine is described as a device with a "tape" that contains symbols (zeros and ones) which can be read from the tape and (if required) changed. The tape can also be moved left and right in order to present the next symbol to the read/write head. If you've ever seen an old reel-to-reel tape machine then this will sound familiar. But remember, it was never actually built. It was a purely theoretical machine that only existed on paper to help Turing prove a point!

You will see the "tape" in a Turing Machine described as "finite but unbounded", which sounds like an oxymoron. Mind you, I find that idea more comfortable than infinity. When we get onto virtual memory in Chapter 8, this idea of a finite but unbounded store may start to resonate again.

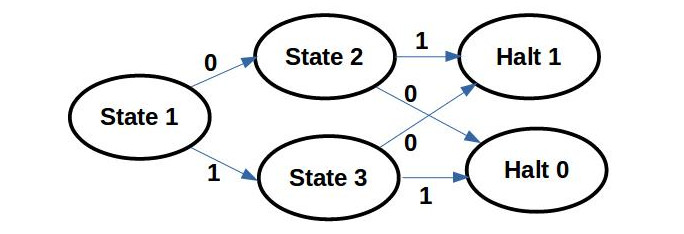

A Turing Machine consists of a collection of "State Tables", each of which describes the machine's behaviour in response to reading a particular symbol. That behaviour could be: move the tape and/or write a new symbol and/or switch to a new state. A simple machine could have one state as follows:

If input = 1 then write 0 and move tape right (one step)

If input = 0 then write 1 and move tape right (one step)

If input = blank then halt

This state machine would take a binary number and turn it into the ones' complement, i.e. invert (or flip) all the bits. A slightly more complicated machine could use three states to carry out a logical operation:

State 1:

If input = 1 then write blank, move tape right and go to State 3

If input = 0 then write blank, move tape right and go to State 2

State 2:

If input = 1 then write 1 and halt

If input = 0 then write 0 and halt

State 3:

If input = 1 then write 0 and halt

If input = 0 then write 1 and halt

This machine will take a two-digit input and leave a "1" on the tape if the two digits are different or leave a "0" on the tape if they are the same. This is the same as an XOR logic function, which we will come back to in Chapter 5. This could also be drawn as a finite state machine diagram:

Another way to draw this would be to have two concentric circles for the halt states, which are referred to as accepting states. In other words, there has been a recognised pattern/stream of ones and zeros which the machine has accepted as "correct".
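To make the two machines above concrete, here is a minimal Python sketch of a Turing machine simulator. It is my own illustration rather than a standard implementation: the rule format `(state, symbol) -> (write, move, next_state)`, the state names `S`, `S1`, `S2` and `S3`, and the `HALT` marker are assumptions chosen for readability. The tape is stored as a dictionary, so it only grows when the head visits a new cell, which is one way of modelling a tape that is "finite but unbounded".

```python
# A minimal Turing machine simulator (illustrative sketch only).
# Rules map (state, symbol read) -> (symbol to write, head move, next state).
# Move is +1 for right, -1 for left and 0 for "stay put"; "HALT" stops the machine.

BLANK = " "


def run_turing_machine(rules, tape_string, start_state, max_steps=1000):
    # Store the tape as a dict so it grows only as cells are visited:
    # finite at any moment, but with no fixed upper bound.
    tape = {i: symbol for i, symbol in enumerate(tape_string)}
    head, state, steps = 0, start_state, 0
    while state != "HALT":
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        symbol = tape.get(head, BLANK)  # unvisited cells read as blank
        write, move, state = rules[(state, symbol)]
        tape[head] = write
        head += move
        steps += 1
    # Read the tape back left to right and drop the surrounding blanks.
    return "".join(tape[i] for i in sorted(tape)).strip(BLANK)


# The one-state machine: flip every bit, halt on the first blank.
ONES_COMPLEMENT = {
    ("S", "1"): ("0", +1, "S"),
    ("S", "0"): ("1", +1, "S"),
    ("S", BLANK): (BLANK, 0, "HALT"),
}

# The three-state XOR machine: State 1 "remembers" the first digit by
# moving to State 2 (it was 0) or State 3 (it was 1).
XOR = {
    ("S1", "1"): (BLANK, +1, "S3"),
    ("S1", "0"): (BLANK, +1, "S2"),
    ("S2", "1"): ("1", 0, "HALT"),
    ("S2", "0"): ("0", 0, "HALT"),
    ("S3", "1"): ("0", 0, "HALT"),
    ("S3", "0"): ("1", 0, "HALT"),
}

if __name__ == "__main__":
    print(run_turing_machine(ONES_COMPLEMENT, "1011", "S"))  # 0100
    for pair in ["00", "01", "10", "11"]:
        print(pair, "->", run_turing_machine(XOR, pair, "S1"))
```

Running it prints 0100 for the ones' complement of 1011, and the XOR machine leaves a 1 on the tape only for the pairs 01 and 10, matching the behaviour described above.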

Written as a transition table (only covered by the AQA exam), the finite state machine shown above would look something like this:

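| Current State | S1 | S1 | S2 | S2 | S3 | S3 | S4 | S5 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Input Symbol | 0 | 1 | 0 | 1 | 0 | 1 | X | X |
| Next State | S2 | S3 | S5 | S4 | S4 | S5 | H | H |

Here, X stands for any input symbol and H for the halt state.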
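A transition table translates almost directly into a lookup table in code. The Python sketch below is one possible encoding, and it rests on two assumptions of mine: that X in the table means "any input symbol", and that S4 and S5 are the two outcome states, with S4 reached when the digits differ and S5 when they are the same (which is what the table's transitions imply). The names `TRANSITIONS`, `OUTPUT`, `run_fsm` and `xor_by_fsm` are hypothetical.

```python
# (current state, input symbol) -> next state, copied from the transition table.
# "X" is treated as a wildcard meaning "any input symbol" (an assumption).
TRANSITIONS = {
    ("S1", "0"): "S2", ("S1", "1"): "S3",
    ("S2", "0"): "S5", ("S2", "1"): "S4",
    ("S3", "0"): "S4", ("S3", "1"): "S5",
    ("S4", "X"): "H",  ("S5", "X"): "H",
}

# Assumed meaning of the two states just before halting.
OUTPUT = {"S4": 1, "S5": 0}  # S4: digits differ, S5: digits match


def run_fsm(symbols, state="S1"):
    """Follow the table one symbol at a time and return the final state."""
    for symbol in symbols:
        # Use an exact match if there is one, otherwise fall back to the wildcard.
        key = (state, symbol) if (state, symbol) in TRANSITIONS else (state, "X")
        state = TRANSITIONS[key]
    return state


def xor_by_fsm(two_digits):
    """Feed in two binary digits and read the answer from the state reached."""
    return OUTPUT[run_fsm(two_digits)]


for pair in ["00", "01", "10", "11"]:
    print(pair, run_fsm(pair), xor_by_fsm(pair))
```

A third input symbol would take either outcome state to H via the wildcard; for example, `run_fsm("011")` returns `"H"`.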

It turns out that a computer is just an example of a Finite State Machine, albeit one with a vast number of possible states. For example, a simple set of four 8-bit registers has over four billion possible states. Think about it: all the registers could hold 00000000, or they could all hold 11111111, or any combination of ones and zeros in between (Chapter 7 will cover binary numbers in detail).
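The four-billion figure comes from counting bits: every extra bit doubles the number of possible states, so n bits give 2^n states. A quick Python check (the function name `count_states` is just for illustration):

```python
def count_states(registers, bits_per_register):
    """Number of distinct states for a set of registers.

    Each bit can be 0 or 1, and every extra bit doubles the count,
    so the total is 2 raised to the total number of bits.
    """
    total_bits = registers * bits_per_register
    return 2 ** total_bits


states = count_states(4, 8)        # 4 x 8 = 32 bits in total
print(f"{states:,}")               # 4,294,967,296
print(states == (2 ** 8) ** 4)     # True: 256 values per register, four registers
```

Thinking of it as 256 possible values per register, multiplied across four registers, gives the same answer.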

In 1937, while studying at Princeton University (USA), Turing was awarded a fellowship, having received a personal recommendation from the respected mathematician John von Neumann [9] (pronounced noy-man rather than new-man). Von Neumann is also known for his work on game theory and his contribution to the development of the atomic bomb (the Manhattan Project). However, we tend to remember him more favourably for his pioneering computing work; in fact, the most common computer architecture in use today bears his name (see von Neumann Architecture in Chapter 2).

The late 1940s saw an explosion of experimental computing machines [10] (some of the early ones referred to as automatic calculators): ENIAC in the USA, EDSAC in the UK (Cambridge) and the Manchester "Baby" or Small Scale Experimental Machine (SSEM) in 1948. All are well worth investigating further.

Colossus was a top-secret war project and remained secret until the 1970s, so for all that time, ENIAC was assumed to be the first all-electronic computer. The Manchester machine, however, was the first stored-program computer. Unlike the earlier machines, which had to be physically rewired to program them, the SSEM stored its program instructions in memory, i.e. software. This machine was actually built to test an idea for a memory technology based on cathode ray tubes (the old TV screens) called Williams Tubes.

It should be noted that EDSAC came into operation in May 1949 at the University of Cambridge (see Appendix 3 for more information) and is now regarded as the first practical general-purpose stored-program electronic computer based on von Neumann's ideas. This machine led directly to the development of the LEO (Lyons Electronic Office) computer, which ran the world's first routine office computer jobs starting in 1951. In 2022, a reconstructed EDSAC came into operation at the National Museum of Computing.

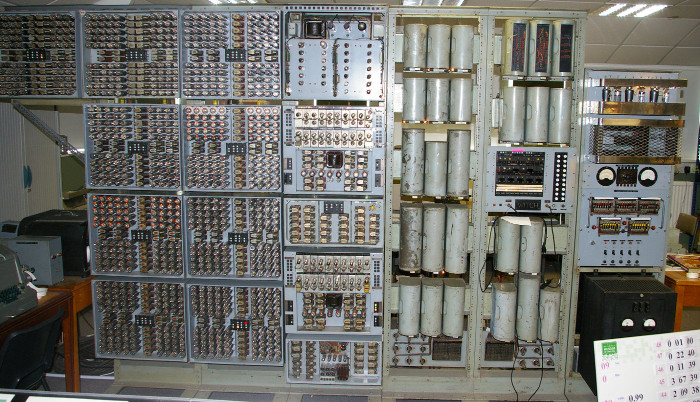

This photo shows an example of an experimental valve-based computer (still in working order) which can be seen at the National Museum of Computing; I took it during a visit to Bletchley Park in 2012. The Harwell Dekatron (or WITCH) is an unusual computer because it worked in base-10 (denary) rather than base-2 (binary); more on that in Chapter 7. You can find out more about this machine on the National Museum of Computing website.

In 1950, Turing (by then based at Manchester University) published his famous paper "Computing Machinery and Intelligence" [11], in which he proposed his "Imitation Game", now referred to as the Turing Test. It is interesting to see the terminology he used: like Babbage, he refers to the memory as the store, and he talks of an "Executive unit", which may be the CPU (or the Arithmetic and Logic Unit; see Chapter 1). There are references to "Control", which may mean the operating system if not the control unit of the CPU. He also uses the terms "Universal machine", "Discrete state machine" and "Digital computer" synonymously. While there is no mention of bits or bytes, he does talk about binary digits and refers to machines being programmed.

What really caught my eye was his prediction that by the year 2000, computers would have a storage capacity of about 10⁹ binary digits, which equates to roughly 125MB. That year, my desktop PC at work had 256MB, though 128MB was more typical at the time. Not a bad guess, given how much technology changed over those fifty years; after all, people thought we'd be living on the Moon by the turn of the new millennium.

In 1951, the "world's first commercial data-processing computer", UNIVAC (Universal Automatic Computer), was sold to the US Census Bureau [12] (a few days after Dad's seventh birthday). It wasn't actually delivered until 1952, a hundred years after the death of Ada Lovelace. LEO also began running routine office jobs in 1951, and according to Guinness World Records, it was the "first business computer in the world".

One interesting story is that a UNIVAC machine was used to predict the result of the 1952 presidential election and reportedly made a more accurate prediction than any of the human political pundits. At that point, the world went computer mad, though it would be another 30 years before computers became a common sight in offices and schools.

In total, 46 of these UNIVAC machines were sold, but with a price tag of just over $1 million, they were only affordable to governments and large corporations.

The invention of the transistor [13] in late 1947 led to the second generation of computers, which arrived in the late 1950s. They were smaller, faster, more reliable and, most importantly, cheaper than the first-generation (valve-based) computers. However, this new generation was quite short-lived, lasting about six years. Transistors are most fondly remembered for the small, cheap, portable transistor radios of the sixties. In fact, to Dad's generation, the word transistor was used to mean a small radio, or wireless set as my Grandfather would have called it. It is funny how we have adopted the term wireless again, now used to describe computers and other portable devices that use Wi-Fi connections instead of Ethernet cables.

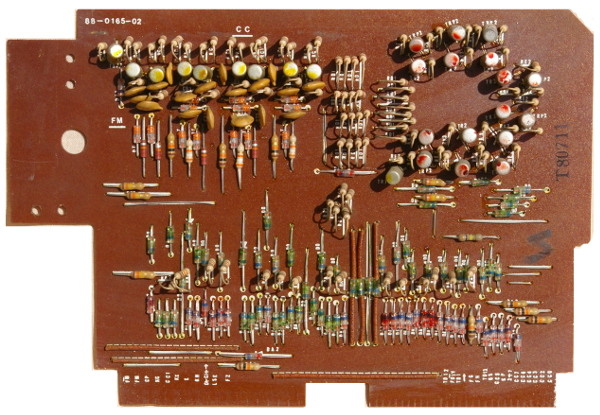

This next photo shows a transistor logic board from a Canon (Canola 130) electronic calculator, which was about the size of a small desktop PC. This model was sold in the late 1960s and cost about £400. By way of comparison, a new Mini (car) cost £470 in 1965.

The collection of three-metre-high metal cabinets in the photo below is a Marconi Transistorised Automatic Computer, or TAC. It was one of a pair that ran from 1966 to 2006 at Wylfa Power Station on Anglesey and is now an exhibit in the Jim Austin Computer Collection [14]. This photograph was taken just after we wheeled them into one of Jim's sheds. Most computers these days aren't in use for more than five years, let alone forty.

The next revolution was the invention of the integrated circuit [15] by Jack Kilby of Texas Instruments in 1958, although it was Robert Noyce, an engineer at Fairchild Semiconductor, who pioneered the use of silicon; hence they became known as silicon chips. Both Kilby and Noyce began their experiments using the semiconductor germanium instead of silicon. Silicon has two advantages: it is less affected by temperature, and it is much cheaper, as it is essentially sand.

Another interesting fact is that the first computer to be designed using all silicon chips was the Apollo Guidance Computer (AGC) [16] which was created for the Apollo Moon landing missions of the late 1960s and early 1970s. The software development team was led by Margaret Hamilton, who is widely recognised as the first person to use the term Software Engineering. It is a fascinating story that I'll refer back to in Chapter 6, which explores logic gates.

In 1971 (the year after Dad's first son was born, i.e. me), the world's first microprocessor was born on November 15. Using integrated circuit technology, Intel managed to build an entire central processing unit on a single piece of silicon. The Intel 4004 [17] was only a 4-bit CPU, but it was the start of a family of processors (via the 8086 and 80286) that now populate the majority of laptop, desktop and server PCs in the world. The 4004 had 2,300 transistors, comparable to the 2,500 valves of the Colossus Mark 2 some 27 years earlier.

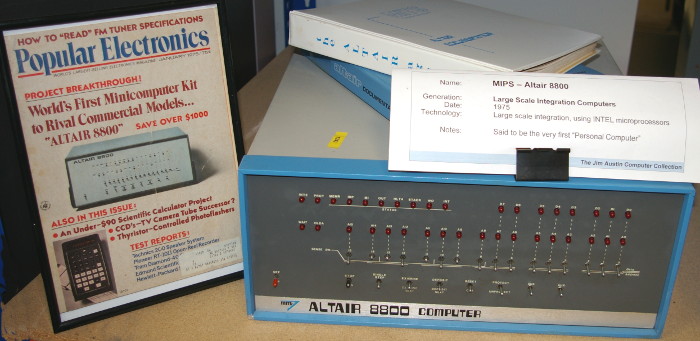

In 1975 the first "home computer" appeared in the USA; it was called the Altair 8800 [18] and used an Intel 8080 CPU. It cost around $400 as a kit; the one pictured here is from Jim's collection and can also be seen in the previous photo to give you an idea of scale (just next to my right foot).

It was not a particularly useful or user-friendly computer, but it caught people's imagination. Steve Wozniak, co-founder of Apple, couldn't afford one, so he built his own home computer, which was the prototype Apple-1. As they say, necessity is the mother of invention.

The UK home computer revolution started in 1979 [19], when these machines became affordable. Names like Acorn and Sinclair (both based in Cambridge, the home of EDSAC) became synonymous with home computing; other popular machines came from US companies such as Commodore and Atari (one of the first companies to make home video games, in the mid-seventies). Sinclair produced the first UK home computer to be sold for under £100, and their ZX Spectrum became the best-selling home computer in the UK; I believe more than four million were sold.

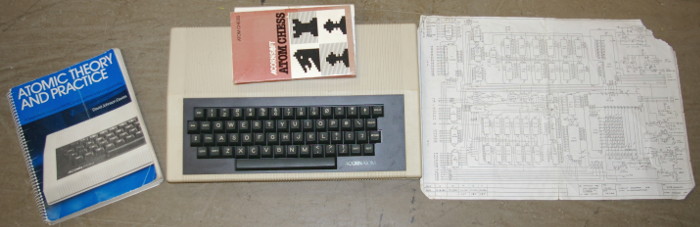

In 1981 Dad bought an Acorn Atom computer kit - a PCB onto which he soldered the various integrated circuits and passive components over the course of a couple of months. That was the moment I got hooked, so once again, thank you Dad for being the computer pioneer of our family. That very machine is now part of the Jim Austin computer collection, complete with the circuit diagram (right) with Dad's hand-written notes on it and the chess game he bought on cassette tape, all shown in this photo:

There is an interesting story about a small research team at Los Alamos writing a simple chess program for the MANIAC (Mathematical Analyser, Numerical Integrator, and Computer) in 1956. This machine was designed by John von Neumann and his colleague Nicholas Metropolis. Von Neumann had previously described chess as a "zero-sum game" (a game where a player can only win by causing the other to lose) in his pioneering work on game theory.

This was not a fully functional chess program: it did not use bishops and had fewer squares (6x6) than a normal chess board, due to the lack of computing power (about 11,000 instructions per second). The program was only able to look a few moves ahead, using a brute-force approach (taking 12 minutes per move), and could be beaten by any half-decent chess player.

Dad later upgraded his Acorn Atom machine with an EPROM (erasable programmable read-only memory) which contained the software for a simple word processing application, software we now take for granted.

In 1981, the same year Dad bought his Atom, the IBM PC [20] arrived on the scene, and the rest, as they say, is history. I must also acknowledge the arrival in 1984 of the Apple Macintosh [21], the first widely available GUI-based computer, complete with a mouse and even a laser printer. For about £10,000, you could buy a Mac, an optional 20MB hard drive, a laser printer and desktop publishing software; for about twice that price, you could buy a two-bedroomed terraced house in the Nottinghamshire town where I grew up.

You might be surprised to learn that the GUI was pioneered by Xerox (yes, the photocopier people) at its Palo Alto Research Center in the early 1970s, and in 1973 they built the first GUI-based computer, the Xerox Alto [22], which cost between $30,000 and $40,000. A complete system with a laser printer cost in the region of $100,000, which makes the 1984 Apple Mac sound like a bargain.

If you are interested in computer history, there are plenty of great online resources. You should also try to visit the National Museum of Computing at Bletchley Park, Milton Keynes, to see the reconstructed Colossus, and the Manchester Museum of Science and Industry to see the reconstructed "Baby"; both are fully working and generate a disturbing amount of heat from all the valves.

I'd like to take a moment here to thank my good friend and former MSc supervisor, Prof. Jim Austin (see next photo), not only for giving me access to his amazing computer collection for many of the photos I've used, but also for critically reviewing the first draft of this book (and reminding me of some important aspects of computer history that I had overlooked). You can view some of his vast collection online. You should also look out for "Collectaholics" series 2 on the BBC, which features his collection.

I have organised the chapters in roughly the same order in which I deliver my teaching, as it seems to make for a logical flow. I will emphasise the points that you need to know for the exam, which I have based on the specifications for the AQA and OCR A-level exams, the Pearson T-Level and the BTEC Level 3 Computer Science unit. There will be additional information that goes beyond the scope of these exams, which I have included for completeness and to help you connect the dots and make sense of the broader subject. I will also suggest further reading, other research activities and practical exercises to reinforce your learning.

I hope you find all of this engaging and useful, and that it helps you achieve the grade that you are aiming for in your final exams. Above all, enjoy this journey; maybe it will inspire you to join one of the most exciting industries on the planet. Think how far computers have come in my Dad's lifetime, and if Moore's Law continues, all of that power is likely to double again during your two-year course. Then just try to imagine how much that technology is going to change over your 40+ years in the industry.
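If you want to put numbers on that, here is a back-of-the-envelope Python sketch. It assumes the popular form of Moore's Law, a doubling roughly every two years; Moore's Law is an observation rather than a law of physics, so treat the output as an illustration, not a forecast. The function name `moores_law_factor` is hypothetical.

```python
def moores_law_factor(years, doubling_period=2.0):
    """Growth factor after `years` if capacity doubles every `doubling_period` years."""
    return 2 ** (years / doubling_period)


print(moores_law_factor(2))               # 2.0: one doubling during a two-year course
print(f"{moores_law_factor(40):,.0f}")    # 1,048,576: twenty doublings over 40 years
```

Twenty doublings works out at roughly a millionfold increase, which is why even a modest-sounding doubling period adds up so quickly over a career.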

For any teachers or tutors who would like to use them in their own teaching and learning resources, I have made the copyright-free diagrams, images and photos available via this website, which you can access here.

As I reflect on the fiftieth-anniversary celebrations of the Department of Computer Science [23] at the University of York, I am reminded of what a new, exciting and fast-changing discipline this is. Just 50 years ago, it took visionaries like the team at York to define what the subject of computer science should encompass and develop those concepts into a highly successful programme, from BSc to PhD.

My choice of university place was influenced by two factors - the course and the campus (built around a very picturesque lake). It transpired that the lake had a slightly unpleasant odour in the summer, while the snow geese that nested close to my accommodation block were rather territorial and vicious. However, in terms of the course (Computer Systems and Software Engineering) I made absolutely the right choice. For those of you studying A-levels or T-Levels and planning to continue with computer science at university, I could not recommend a degree course more highly than the one at York.

Richard Hind (York, July 2022)

REFERENCES

[1] Jeremy Norman (2021) Blaise Pascal Invents a Calculator: The Pascaline <online> https://www.historyofinformation.com/detail.php?id=382

[2] University of Southampton (2019) Quarrying of Stonehenge 'bluestones' dated to 3000 BC <online> https://www.Southampton.ac.uk/news/2019/03/stonehenge-bluestones.page

[3] The Science Museum (No date) Difference Engine No.2, designed by Charles Babbage, built by Science Museum <online> https://collection.sciencemuseumgroup.org.uk/objects/co62748/babbages-difference-engine-no-2-2002-difference-engines

[4] Sydney Padua (No date) Who was Ada Lovelace? <online> https://findingada.com/about/who-was-ada/

[5] Electronics Notes (No date) What is a Vacuum Tube: Thermionic Valve <online> https://www.electronics-notes.com/articles/electronic_components/valves-tubes/what-is-a-tube-basics-tutorial.php

[6] The German Way & More (2021) Konrad Zuse <online> https://www.german-way.com/notable-people/featured-bios/konrad-zuse/

[7] IBM Archives (No date) IBM's ASCC introduction (a.k.a. The Harvard Mark I) <online> https://www.ibm.com/ibm/history/exhibits/markI/markI_intro.html (Link no longer working)

[8] Andrew Hodges (1992) Alan Turing: The Enigma, Publisher: Vintage

[9] J.J. O'Connor and E.F. Robertson (2003) Mac Tutor: John von Neumann <online> https://mathshistory.st-andrews.ac.uk/Biographies/Von_Neumann/

[10] Tech Musings (2019) Retro Delight: Gallery of Early Computers (1940s - 1960s) <online> https://www.pingdom.com/blog/retro-delight-gallery-of-early-computers-1940s-1960s/

[11] D.C. Ince, Editor (1992) Mechanical Intelligence, Volume 1, Publisher: North Holland

[12] United States Census Bureau (2020) UNIVAC I <online> https://www.census.gov/history/www/innovations/technology/univac_i.html

[13] Electronics Notes (No date) Transistor Invention & History - when was the transistor invented <online> https://www.electronics-notes.com/articles/history/semiconductors/when-was-transistor-invented-history-invention.php

[14] Prof. Jim Austin (2018) The Computer Sheds <online> http://www.computermuseum.org.uk/

[15] Tim Youngblood (2017) Jack Kilby and the World's First Integrated Circuit <online> https://www.allaboutcircuits.com/news/jack-kilby-and-the-world-first-integrated-circuit/

[16] NASA (No date) The Apollo guidance computer: Hardware <online> https://history.nasa.gov/computers/Ch2-5.html

[17] Intel (No date) Intel's First Microprocessor, Its invention, introduction, and lasting influence <online> https://www.intel.co.uk/content/www/uk/en/history/museum-story-of-intel-4004.html

[18] National Museum of American History (No date) Altair 8800 Microcomputer <online> https://americanhistory.si.edu/collections/search/object/nmah_334396

[19] Mark Grogan (2018) How Britain Adopted the Home Computer of the 1980s <online> https://thecodeshow.info/how-britain-adopted-the-home-computer-of-the-1980s/

[20] IBM Archives (No date) The birth of the IBM PC <online> https://www.ibm.com/ibm/history/exhibits/pc25/pc25_birth.html (Link no longer working)

[21] Christoph Dernbach (No date) The History of the Apple Macintosh <online> https://www.mac-history.net/2021/02/10/history-apple-macintosh/

[22] Computer History Museum (2021) Xerox Alto <online> https://www.computerhistory.org/revolution/input-output/14/347

[23] University of York (2022) 50 years of Computer Science at York <online> https://www.cs.york.ac.uk/50-years/

Copyright 2020-2026, Richard Hind. All rights reserved.